Probabilistic Paradigm#

Initial Set-Up#

import pymc as pm

SAMPLES = 100

Predecessor and Successor#

def predecessor(a: int) -> int:

with pm.Model():

interim_variable = pm.DiracDelta("a", a)

result = pm.Deterministic("result", interim_variable - 1)

data = pm.sample(SAMPLES)

mean_result = data.posterior.result.mean()

return int(mean_result.values)

assert predecessor(1) == 0

assert predecessor(10) == 9

Only 100 samples in chain.

Sequential sampling (2 chains in 1 job)

Metropolis: [a]

Interrupted

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

File ~/.local/share/virtualenvs/understanding-programming-paradigms-RqbJTeHH/lib/python3.10/site-packages/pytensor/compile/function/types.py:970, in Function.__call__(self, *args, **kwargs)

968 try:

969 outputs = (

--> 970 self.vm()

971 if output_subset is None

972 else self.vm(output_subset=output_subset)

973 )

974 except Exception:

TypeError: expected type_num 1 (NPY_INT8) got 7

During handling of the above exception, another exception occurred:

TypeError Traceback (most recent call last)

Cell In[2], line 11

7 mean_result = data.posterior.result.mean()

8 return int(mean_result.values)

---> 11 assert predecessor(1) == 0

12 assert predecessor(10) == 9

Cell In[2], line 5, in predecessor(a)

3 interim_variable = pm.DiracDelta("a", a)

4 result = pm.Deterministic("result", interim_variable - 1)

----> 5 data = pm.sample(SAMPLES)

7 mean_result = data.posterior.result.mean()

8 return int(mean_result.values)

File ~/.local/share/virtualenvs/understanding-programming-paradigms-RqbJTeHH/lib/python3.10/site-packages/pymc/sampling/mcmc.py:685, in sample(draws, tune, chains, cores, random_seed, progressbar, step, nuts_sampler, initvals, init, jitter_max_retries, n_init, trace, discard_tuned_samples, compute_convergence_checks, keep_warning_stat, return_inferencedata, idata_kwargs, callback, mp_ctx, model, **kwargs)

683 _log.info(f"Sequential sampling ({chains} chains in 1 job)")

684 _print_step_hierarchy(step)

--> 685 _sample_many(**sample_args)

687 t_sampling = time.time() - t_start

689 # Packaging, validating and returning the result was extracted

690 # into a function to make it easier to test and refactor.

File ~/.local/share/virtualenvs/understanding-programming-paradigms-RqbJTeHH/lib/python3.10/site-packages/pymc/sampling/mcmc.py:827, in _sample_many(draws, chains, traces, start, random_seed, step, callback, **kwargs)

811 """Samples all chains sequentially.

812

813 Parameters

(...)

824 Step function

825 """

826 for i in range(chains):

--> 827 _sample(

828 draws=draws,

829 chain=i,

830 start=start[i],

831 step=step,

832 trace=traces[i],

833 random_seed=None if random_seed is None else random_seed[i],

834 callback=callback,

835 **kwargs,

836 )

837 return

File ~/.local/share/virtualenvs/understanding-programming-paradigms-RqbJTeHH/lib/python3.10/site-packages/pymc/sampling/mcmc.py:900, in _sample(chain, progressbar, random_seed, start, draws, step, trace, tune, model, callback, **kwargs)

898 sampling = sampling_gen

899 try:

--> 900 for it, diverging in enumerate(sampling):

901 if it >= skip_first and diverging:

902 _pbar_data["divergences"] += 1

File ~/.local/share/virtualenvs/understanding-programming-paradigms-RqbJTeHH/lib/python3.10/site-packages/fastprogress/fastprogress.py:50, in ProgressBar.__iter__(self)

48 except Exception as e:

49 self.on_interrupt()

---> 50 raise e

File ~/.local/share/virtualenvs/understanding-programming-paradigms-RqbJTeHH/lib/python3.10/site-packages/fastprogress/fastprogress.py:41, in ProgressBar.__iter__(self)

39 if self.total != 0: self.update(0)

40 try:

---> 41 for i,o in enumerate(self.gen):

42 if self.total and i >= self.total: break

43 yield o

File ~/.local/share/virtualenvs/understanding-programming-paradigms-RqbJTeHH/lib/python3.10/site-packages/pymc/sampling/mcmc.py:968, in _iter_sample(draws, step, start, trace, chain, tune, model, random_seed, callback)

966 if i == tune:

967 step.stop_tuning()

--> 968 point, stats = step.step(point)

969 trace.record(point, stats)

970 log_warning_stats(stats)

File ~/.local/share/virtualenvs/understanding-programming-paradigms-RqbJTeHH/lib/python3.10/site-packages/pymc/step_methods/arraystep.py:100, in ArrayStepShared.step(self, point)

97 var_dict = {cast(str, v.name): point[cast(str, v.name)] for v in self.vars}

98 q = DictToArrayBijection.map(var_dict)

--> 100 apoint, stats = self.astep(q)

102 if not isinstance(apoint, RaveledVars):

103 # We assume that the mapping has stayed the same

104 apoint = RaveledVars(apoint, q.point_map_info)

File ~/.local/share/virtualenvs/understanding-programming-paradigms-RqbJTeHH/lib/python3.10/site-packages/pymc/step_methods/metropolis.py:273, in Metropolis.astep(self, q0)

271 q = q_temp

272 else:

--> 273 accept_rate = self.delta_logp(q, q0d)

274 q, accepted = metrop_select(accept_rate, q, q0d)

275 self.accept_rate_iter = accept_rate

File ~/.local/share/virtualenvs/understanding-programming-paradigms-RqbJTeHH/lib/python3.10/site-packages/pytensor/compile/function/types.py:983, in Function.__call__(self, *args, **kwargs)

981 if hasattr(self.vm, "thunks"):

982 thunk = self.vm.thunks[self.vm.position_of_error]

--> 983 raise_with_op(

984 self.maker.fgraph,

985 node=self.vm.nodes[self.vm.position_of_error],

986 thunk=thunk,

987 storage_map=getattr(self.vm, "storage_map", None),

988 )

989 else:

990 # old-style linkers raise their own exceptions

991 raise

File ~/.local/share/virtualenvs/understanding-programming-paradigms-RqbJTeHH/lib/python3.10/site-packages/pytensor/link/utils.py:535, in raise_with_op(fgraph, node, thunk, exc_info, storage_map)

530 warnings.warn(

531 f"{exc_type} error does not allow us to add an extra error message"

532 )

533 # Some exception need extra parameter in inputs. So forget the

534 # extra long error message in that case.

--> 535 raise exc_value.with_traceback(exc_trace)

File ~/.local/share/virtualenvs/understanding-programming-paradigms-RqbJTeHH/lib/python3.10/site-packages/pytensor/compile/function/types.py:970, in Function.__call__(self, *args, **kwargs)

967 t0_fn = time.perf_counter()

968 try:

969 outputs = (

--> 970 self.vm()

971 if output_subset is None

972 else self.vm(output_subset=output_subset)

973 )

974 except Exception:

975 restore_defaults()

TypeError: expected type_num 1 (NPY_INT8) got 7

Apply node that caused the error: Elemwise{Composite}(Reshape{0}.0, TensorConstant{1}, TensorConstant{0}, TensorConstant{-inf}, Reshape{0}.0)

Toposort index: 2

Inputs types: [TensorType(int8, ()), TensorType(int8, ()), TensorType(int8, ()), TensorType(float32, ()), TensorType(int8, ())]

Inputs shapes: [(), (), (), (), ()]

Inputs strides: [(), (), (), (), ()]

Inputs values: [array(1), array(1, dtype=int8), array(0, dtype=int8), array(-inf, dtype=float32), array(0)]

Outputs clients: [['output']]

HINT: Re-running with most PyTensor optimizations disabled could provide a back-trace showing when this node was created. This can be done by setting the PyTensor flag 'optimizer=fast_compile'. If that does not work, PyTensor optimizations can be disabled with 'optimizer=None'.

HINT: Use the PyTensor flag `exception_verbosity=high` for a debug print-out and storage map footprint of this Apply node.

def successor(a: int) -> int:

with pm.Model():

interim_variable = pm.DiracDelta("a", a)

result = pm.Deterministic("result", interim_variable + 1)

data = pm.sample(SAMPLES)

mean_result = data.posterior.result.mean()

return int(mean_result.values)

assert successor(0) == 1

assert successor(10) == 11

Only 100 samples in chain.

Multiprocess sampling (4 chains in 4 jobs)

Metropolis: [a]

100.00% [4400/4400 00:04<00:00 Sampling 4 chains, 0 divergences]

Sampling 4 chains for 1_000 tune and 100 draw iterations (4_000 + 400 draws total) took 27 seconds.

w:\Repositories\understanding-programming-paradigms\.venv\lib\site-packages\arviz\stats\diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

Only 100 samples in chain.

Multiprocess sampling (4 chains in 4 jobs)

Metropolis: [a]

100.00% [4400/4400 00:04<00:00 Sampling 4 chains, 0 divergences]

Sampling 4 chains for 1_000 tune and 100 draw iterations (4_000 + 400 draws total) took 27 seconds.

w:\Repositories\understanding-programming-paradigms\.venv\lib\site-packages\arviz\stats\diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

Addition#

def addition(addend_1: int, addend_2: int) -> int:

with pm.Model():

interim_addend_1 = pm.DiracDelta("a1", addend_1)

interim_addend_2 = pm.DiracDelta("a2", addend_2)

result = pm.Deterministic("result", interim_addend_1 + interim_addend_2)

data = pm.sample(SAMPLES)

mean_result = data.posterior.result.mean()

return int(mean_result.values)

assert addition(0, 0) == 0

assert addition(1, 0) == 1

assert addition(0, 1) == 1

assert addition(10, 10) == 20

Only 100 samples in chain.

Multiprocess sampling (4 chains in 4 jobs)

CompoundStep

>Metropolis: [a1]

>Metropolis: [a2]

100.00% [4400/4400 00:06<00:00 Sampling 4 chains, 0 divergences]

Sampling 4 chains for 1_000 tune and 100 draw iterations (4_000 + 400 draws total) took 27 seconds.

w:\Repositories\understanding-programming-paradigms\.venv\lib\site-packages\arviz\stats\diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

Only 100 samples in chain.

Multiprocess sampling (4 chains in 4 jobs)

CompoundStep

>Metropolis: [a1]

>Metropolis: [a2]

100.00% [4400/4400 00:04<00:00 Sampling 4 chains, 0 divergences]

Sampling 4 chains for 1_000 tune and 100 draw iterations (4_000 + 400 draws total) took 27 seconds.

w:\Repositories\understanding-programming-paradigms\.venv\lib\site-packages\arviz\stats\diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

Only 100 samples in chain.

Multiprocess sampling (4 chains in 4 jobs)

CompoundStep

>Metropolis: [a1]

>Metropolis: [a2]

100.00% [4400/4400 00:04<00:00 Sampling 4 chains, 0 divergences]

Sampling 4 chains for 1_000 tune and 100 draw iterations (4_000 + 400 draws total) took 23 seconds.

w:\Repositories\understanding-programming-paradigms\.venv\lib\site-packages\arviz\stats\diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

Only 100 samples in chain.

Multiprocess sampling (4 chains in 4 jobs)

CompoundStep

>Metropolis: [a1]

>Metropolis: [a2]

100.00% [4400/4400 00:04<00:00 Sampling 4 chains, 0 divergences]

Sampling 4 chains for 1_000 tune and 100 draw iterations (4_000 + 400 draws total) took 24 seconds.

w:\Repositories\understanding-programming-paradigms\.venv\lib\site-packages\arviz\stats\diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

Multiplication#

def multiplication(multiplicand: int, multiplier: int) -> int:

with pm.Model():

interim_multiplicand = pm.DiracDelta("m1", multiplicand)

interim_multiplier = pm.DiracDelta("m2", multiplier)

result = pm.Deterministic("result", interim_multiplicand * interim_multiplier)

data = pm.sample(SAMPLES)

mean_result = data.posterior.result.mean()

return int(mean_result.values)

assert multiplication(0, 0) == 0

assert multiplication(2, 0) == 0

assert multiplication(0, 2) == 0

assert multiplication(10, 10) == 100

Only 100 samples in chain.

Multiprocess sampling (4 chains in 4 jobs)

CompoundStep

>Metropolis: [m1]

>Metropolis: [m2]

100.00% [4400/4400 00:05<00:00 Sampling 4 chains, 0 divergences]

Sampling 4 chains for 1_000 tune and 100 draw iterations (4_000 + 400 draws total) took 26 seconds.

w:\Repositories\understanding-programming-paradigms\.venv\lib\site-packages\arviz\stats\diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

Only 100 samples in chain.

Multiprocess sampling (4 chains in 4 jobs)

CompoundStep

>Metropolis: [m1]

>Metropolis: [m2]

100.00% [4400/4400 00:04<00:00 Sampling 4 chains, 0 divergences]

Sampling 4 chains for 1_000 tune and 100 draw iterations (4_000 + 400 draws total) took 24 seconds.

w:\Repositories\understanding-programming-paradigms\.venv\lib\site-packages\arviz\stats\diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

Only 100 samples in chain.

Multiprocess sampling (4 chains in 4 jobs)

CompoundStep

>Metropolis: [m1]

>Metropolis: [m2]

100.00% [4400/4400 00:05<00:00 Sampling 4 chains, 0 divergences]

Sampling 4 chains for 1_000 tune and 100 draw iterations (4_000 + 400 draws total) took 25 seconds.

w:\Repositories\understanding-programming-paradigms\.venv\lib\site-packages\arviz\stats\diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

Only 100 samples in chain.

Multiprocess sampling (4 chains in 4 jobs)

CompoundStep

>Metropolis: [m1]

>Metropolis: [m2]

100.00% [4400/4400 00:05<00:00 Sampling 4 chains, 0 divergences]

Sampling 4 chains for 1_000 tune and 100 draw iterations (4_000 + 400 draws total) took 27 seconds.

w:\Repositories\understanding-programming-paradigms\.venv\lib\site-packages\arviz\stats\diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

Exponentiation#

def exponentiation(base: int, exponent: int) -> float:

with pm.Model():

interim_base = pm.DiracDelta("b", base)

interim_exponent = pm.DiracDelta("e", exponent)

result = pm.Deterministic("result", interim_base ** interim_exponent)

data = pm.sample(SAMPLES)

mean_result = data.posterior.result.mean()

return int(mean_result.values)

assert exponentiation(1, 0) == 1

assert exponentiation(0, 1) == 0

assert exponentiation(3, 3) == 27

Only 100 samples in chain.

Multiprocess sampling (4 chains in 4 jobs)

CompoundStep

>Metropolis: [b]

>Metropolis: [e]

100.00% [4400/4400 00:05<00:00 Sampling 4 chains, 0 divergences]

Sampling 4 chains for 1_000 tune and 100 draw iterations (4_000 + 400 draws total) took 27 seconds.

w:\Repositories\understanding-programming-paradigms\.venv\lib\site-packages\arviz\stats\diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

Only 100 samples in chain.

Multiprocess sampling (4 chains in 4 jobs)

CompoundStep

>Metropolis: [b]

>Metropolis: [e]

100.00% [4400/4400 00:05<00:00 Sampling 4 chains, 0 divergences]

Sampling 4 chains for 1_000 tune and 100 draw iterations (4_000 + 400 draws total) took 27 seconds.

w:\Repositories\understanding-programming-paradigms\.venv\lib\site-packages\arviz\stats\diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

Only 100 samples in chain.

Multiprocess sampling (4 chains in 4 jobs)

CompoundStep

>Metropolis: [b]

>Metropolis: [e]

100.00% [4400/4400 00:04<00:00 Sampling 4 chains, 0 divergences]

Sampling 4 chains for 1_000 tune and 100 draw iterations (4_000 + 400 draws total) took 28 seconds.

w:\Repositories\understanding-programming-paradigms\.venv\lib\site-packages\arviz\stats\diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

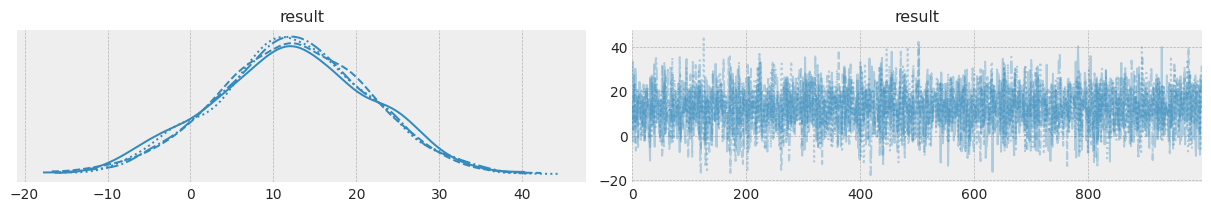

What is particular of the Probabilistic Paradigm?

men_height_mean = 175.768

men_height_std = 6.7564

women_height_mean = 163.322

women_height_std = 6.5532

with pm.Model():

men_height = pm.Normal("Height_{man}", mu=men_height_mean, sigma=men_height_std)

women_height = pm.Normal("Height_{woman}", mu=women_height_mean, sigma=women_height_std)

difference = pm.Deterministic("result", men_height - women_height)

data = pm.sample()

mean_result = data.posterior.result.mean()

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [Height_{man}, Height_{woman}]

100.00% [8000/8000 00:10<00:00 Sampling 4 chains, 0 divergences]

Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 34 seconds.

import arviz as az

az.style.use("bmh")

az.plot_trace(data, var_names=["result"])

array([[<AxesSubplot: title={'center': 'result'}>,

<AxesSubplot: title={'center': 'result'}>]], dtype=object)

threshold = 35

probability = float((data.posterior.result > threshold).mean().values)

print(f"There is a probability of {probability:.2%} that the difference is larger than {threshold}cm")

There is a probability of 0.62% that the difference is larger than 35cm